Noise threatens reliability of Clinical AI Scribes, new research shows

Dr Thomas Draper, Research Fellow

Clinical Artificial Intelligence (AI) scribes, which listen to medical consultations and automatically generate patient notes, are being hailed as a way to reduce the burden of paperwork on doctors. But new research led by the Centre of Digital Excellence (CoDE) at the University of the West of England suggests that the accuracy of these systems depends heavily on the environment in which they are used.

The study, published in BMJ Digital Health and AI, found that microphone distance and common background noises (such as heavy rain or overlapping voices) can significantly reduce the reliability of AI-generated clinical summaries.

How the study worked

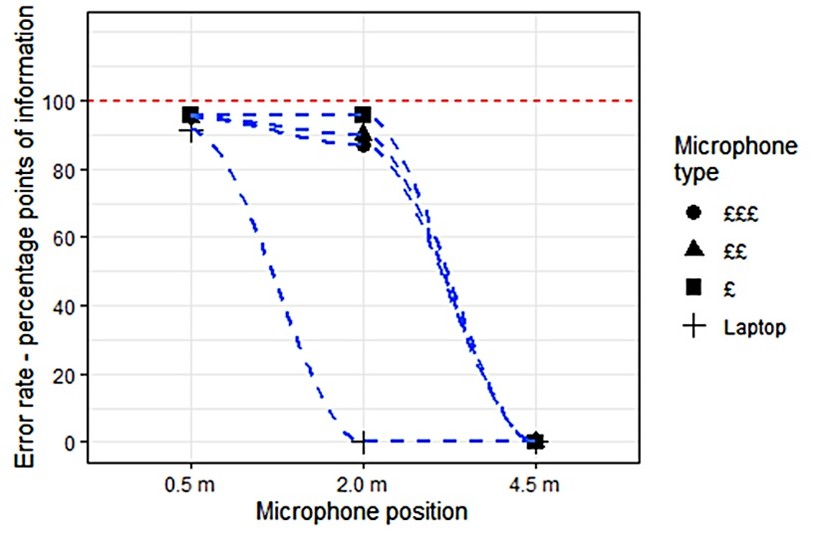

The research team recreated GP consultations in a simulated clinic and tested a widely used commercial AI scribe under different conditions. They examined performance with the microphone placed at distances ranging from half a metre to over four metres away. They also introduced everyday background noises, including crying babies, construction work, heavy rain and toddler chatter. In addition, they added “informational noise”, such as casual non-medical conversations or irrelevant medical monologues from the patient, to see how well the system could filter these out. The outputs were then compared against “clean” summaries created under ideal audio conditions.

What we found

The most common errors were omissions, where key details were left out of the consultation notes. These included details such as when symptoms began, follow-up plans, or which medication a patient was taking. At distances over four metres, all clinical information was lost across every microphone tested. Even at two metres, omissions increased sharply for laptop microphones.

Background sounds also played a critical role. Crying babies and construction noise caused fewer problems than comprehensible speech (such as toddler chatter) and the sound of heavy rain, both of which led to major errors. By contrast, informational noise was usually handled well, with irrelevant details either filtered out or appropriately flagged as patient concerns.

Why it matters

The findings suggest that while AI scribes can cope with some distractions, they are highly vulnerable to the acoustic environment. Without careful microphone placement and vigilance from clinicians, errors could easily slip into patient records.

“AI scribes have the potential to transform clinical practice, but our study highlights that microphone distance and certain background noises can have a major impact on accuracy. Clinicians must remain vigilant and always review AI-generated summaries before adding them to patient records,” said Dr Thomas Draper, lead author and Research Fellow at CoDE.

Looking ahead

The research points to simple ways of improving performance, such as using a dedicated microphone within one metre of the consultation and avoiding reliance on laptop microphones. Clinicians should also take particular care in noisy environments, especially where overlapping speech or continuous background sounds are present. The authors stress that AI scribes should complement rather than replace clinician oversight.

The full paper, Impact of Acoustic and Informational Noise on AI-Generated Clinical Summaries, is freely available in BMJ Digital Health and AI: http://dx.doi.org/10.1136/bmjdhai-2025-000057